You can still download previous versions from the download page.

ayaname

Recent community posts

The latest real-life model doesn't seem to match the 3.1 model in quality.

Here's a comparison of 2X interpolation from 24fps to 48fps:

- Original clip

- Real-Life Model - Quality High (Rife-App 2.8)

- Real-Life Model - [Old] 3.1 (Rife-App 2.7)

- Topaz Video Enhance AI Chronos v2 model

Skip to around the 1-minute mark. These parts show the differences in quality:

- Hand hitting the bell

- Twirling around after coming through the door

- Shimmering of the escalator

Topaz Video Enhance AI seems to produce the best results, and it uses less VRAM, but it's significantly slower than RIFE-App.

Personally, I chose RIFE-App because:

- When I used Flowframes a few months ago to interpolate high resolution video (4k to 8k, especially VR), it required a MASSIVE amount of disk space (not RAM or VRAM, hard disk). Like hundreds of gigabytes. RIFE-App doesn't have this problem.

- RIFE-App's profits are shared with the creator of the RIFE algorithm.
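To put the disk space point in perspective, here is a rough back-of-the-envelope sketch. It assumes the tool writes every frame to disk as an image and treats each frame as uncompressed 8-bit RGB, which is an upper bound (PNG compression would shrink it). The function names and the sample clip length are hypothetical, not taken from either app.

```python
def raw_frame_bytes(width, height, channels=3, bytes_per_channel=1):
    """Size of one uncompressed frame in bytes (assumed 8-bit RGB by default)."""
    return width * height * channels * bytes_per_channel


def sequence_gigabytes(frame_count, width, height):
    """Upper-bound disk usage in GB (10^9 bytes) for a frame sequence."""
    return frame_count * raw_frame_bytes(width, height) / 1e9


if __name__ == "__main__":
    # Hypothetical clip: 10 minutes at 60 fps, 7680x3840 (VR-style 8K).
    frames = 10 * 60 * 60
    print(f"{sequence_gigabytes(frames, 7680, 3840):.0f} GB uncompressed")
```

Even if compression cut that by a large factor, a long high-resolution clip would still land in the hundreds of gigabytes.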

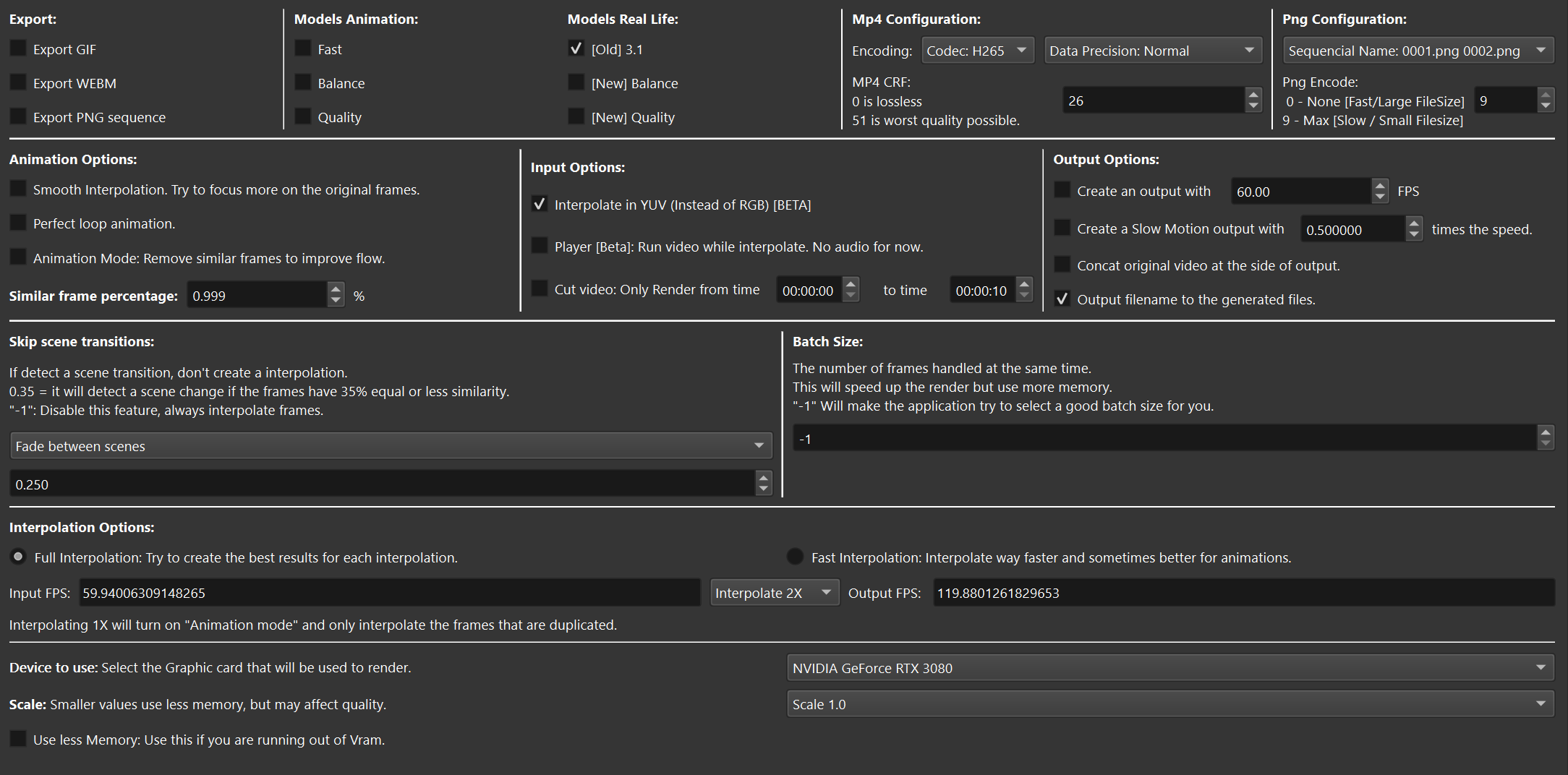

I tried interpolating in RGB (turned off "Interpolate in YUV") to avoid the broken pipe error posted below. It seems to work without crashing. However, the resulting video flickers a lot, with black frames inserted. It looks like this:

https://gfycat.com/validdependentdove

Here are small clips showing that interpolating in RGB produces flickering, while interpolating in YUV does not.

The crash_log.txt is empty.

I ran into this same error with another video file.

Using Benchmark: True
Batch Size: -1
Input FPS: 23.976023976023978
Use all GPUS: False
Render Mode: 0
Interpolations: 2X
Use Smooth: 0
Use Alpha: 0
Use YUV: 1
Encode: libsvtav1
Device: cuda:0
Using Half-Precision: True
Resolution: 3840x2160
Model: rl_medium
Scale: 0.5
Using Model: rl_medium
Selected auto batch size, testing a good batch size.
Setting new batch size to 1
Resolution: 3840x2160
RunTime: 2716.950000
Total Frames: 65142
0%| | 2/65142 [00:06<70:21:19, 3.89s/it, file=File 0]
-------------------------------------------
SVT [version]: SVT-AV1 Encoder Lib v0.8.6-72-gec7ac87f
SVT [build] : GCC 10.2.0 64 bit
LIB Build date: Feb 7 2021 13:13:12
0%| | 3/65142 [00:07<39:40:05, 2.19s/it, file=File 1]
------------------------------------------
Number of logical cores available: 24
Number of PPCS 53
[asm level on system : up to avx2]
[asm level selected : up to avx2]
-------------------------------------------
SVT [config]: Main Profile Tier (auto) Level (auto)
SVT [config]: Preset : 7
SVT [config]: EncoderBitDepth / EncoderColorFormat / CompressedTenBitFormat : 10 / 1 / 0
SVT [config]: SourceWidth / SourceHeight : 3840 / 2160
SVT [config]: Fps_Numerator / Fps_Denominator / Gop Size / IntraRefreshType : 48000 / 1001 / 49 / 2
SVT [config]: HierarchicalLevels / PredStructure : 4 / 2
SVT [config]: BRC Mode / QP / LookaheadDistance / SceneChange : CQP / 16 / 0 / 0
-------------------------------------------
12%|██▍ | 7969/65142 [53:28<3:52:33, 4.10it/s, file=File 7967]
Exception ignored in thread started by: <function queue_file_save at 0x000002F3FC0D2A60>
Traceback (most recent call last):
File "my_DAIN_class.py", line 805, in queue_file_save
File "my_DAIN_class.py", line 714, in PipeFrame
BrokenPipeError: [Errno 32] Broken pipe
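As a sanity check on the numbers in this log, here is a small sketch of the frame-rate arithmetic. It treats the input rate as exactly 24000/1001 (the usual "23.976" rate), doubles it for 2X, and multiplies the runtime by the input rate. Whether the app computes "Total Frames" this way is my assumption; the log values simply agree with it. The helper names are made up for illustration.

```python
from fractions import Fraction

# The "23.976" frame rate expressed exactly.
NTSC_FILM = Fraction(24000, 1001)


def interpolated_rate(input_fps, factor):
    """Output frame rate after interpolating by an integer factor."""
    return input_fps * factor


def frame_count(runtime_seconds, fps):
    """Frames in a clip: runtime (as a decimal string) times fps, rounded."""
    return round(Fraction(runtime_seconds) * fps)


if __name__ == "__main__":
    out = interpolated_rate(NTSC_FILM, 2)
    # Compare with Fps_Numerator / Fps_Denominator in the SVT config.
    print(out.numerator, out.denominator)
    # Compare with "Total Frames" for RunTime 2716.950000.
    print(frame_count("2716.950000", NTSC_FILM))
```

So the encoder's 48000 / 1001 is just the doubled input rate, and the frame total lines up with the runtime, which suggests the settings themselves are being read correctly before the pipe breaks.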

Ran into this error during interpolation. Do you know what's wrong?

Exception ignored in thread started by: <function queue_file_save at 0x000002867E112A60>
Traceback (most recent call last):
File "my_DAIN_class.py", line 805, in queue_file_save
File "my_DAIN_class.py", line 714, in PipeFrame
BrokenPipeError: [Errno 32] Broken pipe

Full logs:

Using Benchmark: True
Batch Size: -1
Input FPS: 23.976023976023978
Use all GPUS: False
Render Mode: 0
Interpolations: 2X
Use Smooth: 0
Use Alpha: 0
Use YUV: 1
Encode: libsvtav1
Device: cuda:0
Using Half-Precision: True
Resolution: 3840x2160
Model: rl_medium
Scale: 0.5
Using Model: rl_medium
Selected auto batch size, testing a good batch size.
Setting new batch size to 1
Resolution: 3840x2160
RunTime: 2453.803000
Total Frames: 58832
0%| | 2/58832 [00:06<59:02:21, 3.61s/it, file=File 0]
-------------------------------------------
SVT [version]: SVT-AV1 Encoder Lib v0.8.6-72-gec7ac87f
SVT [build] : GCC 10.2.0 64 bit
LIB Build date: Feb 7 2021 13:13:12
-------------------------------------------
Number of logical cores available: 24
Number of PPCS 53
[asm level on system : up to avx2]
[asm level selected : up to avx2]
-------------------------------------------
SVT [config]: Main Profile Tier (auto) Level (auto)
SVT [config]: Preset : 7
SVT [config]: EncoderBitDepth / EncoderColorFormat / CompressedTenBitFormat : 10 / 1 / 0
SVT [config]: SourceWidth / SourceHeight : 3840 / 2160
SVT [config]: Fps_Numerator / Fps_Denominator / Gop Size / IntraRefreshType : 48000 / 1001 / 49 / 2
SVT [config]: HierarchicalLevels / PredStructure : 4 / 2
SVT [config]: BRC Mode / QP / LookaheadDistance / SceneChange : CQP / 15 / 0 / 0
-------------------------------------------
53%|████████▌ | 31293/58832 [3:24:44<2:00:26, 3.81it/s, file=File 31291]
Exception ignored in thread started by: <function queue_file_save at 0x000002867E112A60>
Traceback (most recent call last):
File "my_DAIN_class.py", line 805, in queue_file_save
File "my_DAIN_class.py", line 714, in PipeFrame
BrokenPipeError: [Errno 32] Broken pipe

Looks like "[New] Balance" with Scale 0.5 got rid of the vertical judder. ("[New] Quality" ran out of VRAM even with Scale 0.5.)

Interpolated Clip (New Balance Scale 0.5)

I can't seem to play the file, even in VLC.

Hmm, not sure why. I tried VLC and it worked. What about PotPlayer?

Tested turning off "Interpolate in YUV". The results are even worse, with black block artifacts: Interpolated Clip in RGB

From my testing with VR videos, the Nvidia RTX 3080 (10GB VRAM) can barely handle 5K (e.g., around 5100x2550). Anything higher crashes due to running out of VRAM. Note that I had to set Data Precision to Above Normal. Data Precision Normal led to unacceptable artifacts.

I tried the "save memory" option but it didn't seem to help much.

Thank you for this magical and easy to use technology!

I run an RTX 3080 GPU on Windows 10, so I installed Python 3.8.6 and other dependencies following the instructions here.

Important: Ampere GPUs perform worse than they should on cuDNN 8.04 and older. If your cuDNN version is not >=8.05, you can manually update it by downloading it from Nvidia and replacing the DLLs in the torch folder. The embedded runtime already includes those files.

I'm stumped by this note regarding cuDNN though. How do I check what cuDNN version my Python install has? And how do I update cuDNN? I'm not sure what files to look for and where to look.
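For anyone with the same question, here is a minimal sketch of one way to check, assuming PyTorch is installed in the Python environment in question. `torch.backends.cudnn.version()` returns the cuDNN version as an integer (for example, 8005 for 8.0.5). The decoding helper below assumes the 8.x numbering scheme (major*1000 + minor*100 + patch), and the `torch/lib` location for the DLLs is what I'd expect on a typical Windows install rather than something guaranteed.

```python
import glob
import os


def decode_cudnn_version(raw):
    """Split an 8.x-style cuDNN version integer (e.g. 8005) into (major, minor, patch)."""
    major, rest = divmod(raw, 1000)
    minor, patch = divmod(rest, 100)
    return major, minor, patch


def report_cudnn():
    """Print the cuDNN version PyTorch sees and list bundled cuDNN DLLs, if any."""
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this Python environment.")
        return None
    raw = torch.backends.cudnn.version()  # None when cuDNN is unavailable
    if raw is None:
        print("This PyTorch build reports no cuDNN.")
        return None
    version = decode_cudnn_version(raw)
    print("cuDNN version:", ".".join(str(part) for part in version))
    # Assumption: on Windows the bundled DLLs usually sit in torch/lib.
    lib_dir = os.path.join(os.path.dirname(torch.__file__), "lib")
    for dll in sorted(glob.glob(os.path.join(lib_dir, "cudnn*.dll"))):
        print("found:", dll)
    return version


if __name__ == "__main__":
    report_cudnn()
```

If the printed version is below 8.0.5, the DLLs listed are presumably the ones the note means when it says to replace the files in the torch folder.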

Thanks again!

Found this app which supports DAIN as well as a different algorithm RIFE:

https://github.com/n00mkrad/flowframes

Maybe it doesn't have the same memory leak issue? Also, RIFE seems really interesting given its lower VRAM requirements and higher efficiency.

Hopefully these two amazing projects can benefit each other!

ELI5 explainer video for DAIN and RIFE:

I'm also running into this memory leak problem. I have 32GB of RAM.

Where can I download DAIN-APP 0.48? The original download link from https://www.patreon.com/posts/dain-app-0-48-43528108 is no longer working.

I've confirmed the following with 0.43:

At first I thought 0.43 had the same issue with interpolation not starting. GRisk, you were right that when I uncheck "Don't interpolate scene changes", everything starts normally.

Then, I realized that "Don't interpolate scene changes" actually does work if I wait long enough. With that setting enabled, it took hours after PNG frame extraction completed before interpolation started. With "Don't interpolate scene changes" disabled, interpolation starts immediately.

@GRisk

How should I interpret the "Detection sensitivity" field? The default is 10. Does a higher number mean more sensitive or less sensitive to scene changes? Do you recommend we stick with the default of 10?

For "real life" videos, do you recommend we always enable "Don't interpolate scene changes"?

What does the "Verify Scenes Changes" button do? When I click it, DAIN-APP shuts down (crashes?).

Thank you and the DAIN team for this amazing technology and app. It's like magic!